Understanding how distance affects human vision is central to grasping our perceptual limits. The human eye is a remarkably sophisticated organ, capable of discerning fine detail across considerable distances. However, there is a threshold, a distance beyond which people can no longer make one another out, and it depends on a range of physiological and environmental factors. This analysis outlines the key elements of vision science that determine visual acuity over distance.

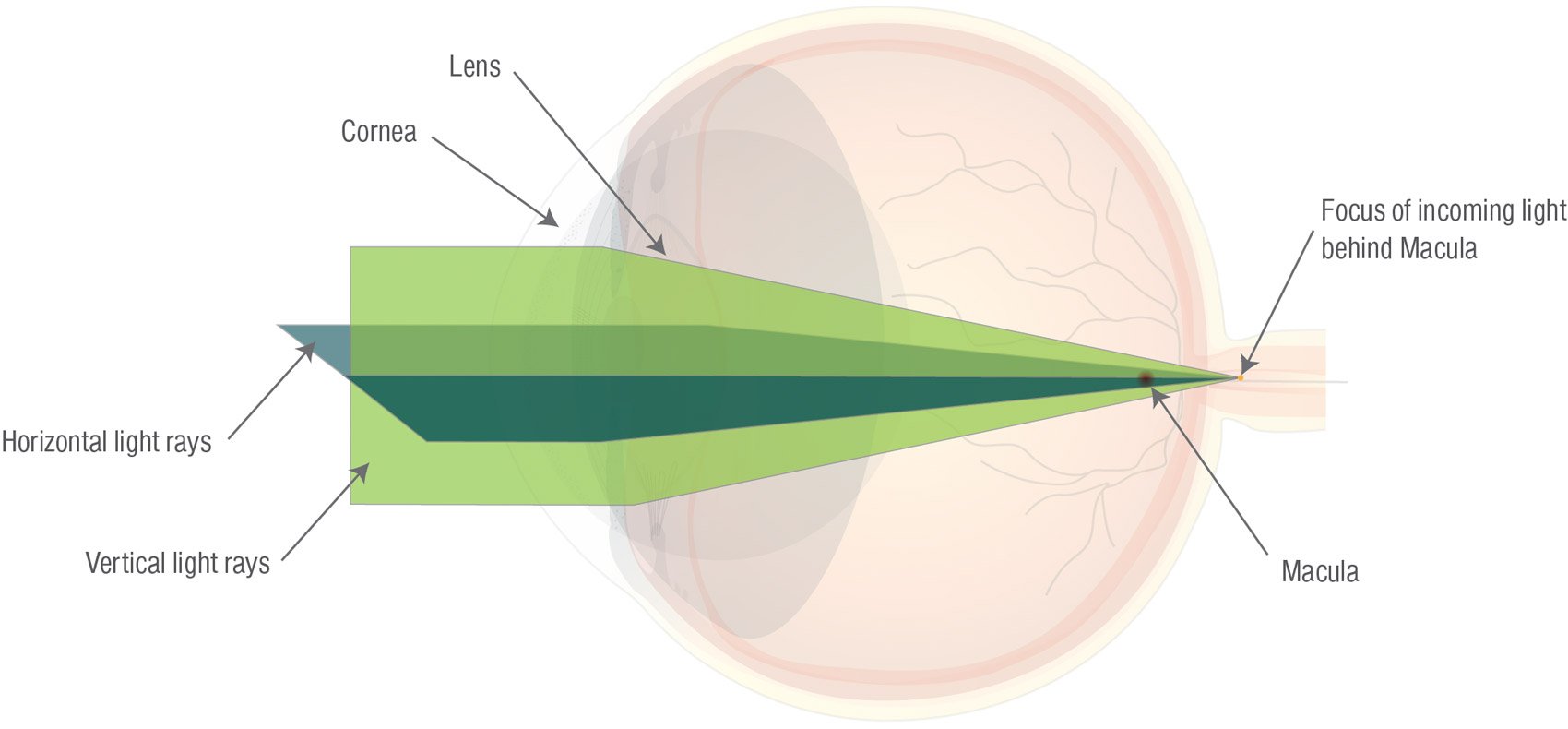

First, consider the basic anatomy of the human eye. Structurally, the eye functions much like a camera: the cornea and lens focus light onto the retina, where photoreceptor cells convert it into neural signals. The interplay between the cornea, lens, and retina determines a person’s visual clarity at varying distances. When observing an object, light travels through the cornea, then passes through the lens, which adjusts its curvature so that a crisp image is projected onto the retina. The limits of this system show up at longer distances, where an object’s image on the retina shrinks until its details become too small to resolve.

One significant factor influencing vision across distances is accommodation, the eye’s ability to shift its focus between far and near objects. In young individuals, this process is remarkably efficient. However, as one ages, the lens loses flexibility, typically culminating in presbyopia, a condition where the eye struggles to focus on near objects. Presbyopia by itself leaves distance vision largely intact; blurred distance vision more often comes from uncorrected refractive errors such as myopia. Either way, people whose eyes can no longer focus sharply at a given range may notice that they are unable to see family or friends clearly at that range.
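
The focusing effort that accommodation requires can be put in rough numbers. The sketch below uses the standard optics approximation that focusing demand in diopters is the reciprocal of the viewing distance in meters. It assumes an eye that needs no correction for distance, and the function name and sample distances are illustrative rather than taken from any particular source.

```python
def accommodation_demand_diopters(distance_m: float) -> float:
    """Approximate focusing demand, in diopters, for an object distance_m meters away.

    Assumes an eye that is in focus at infinity when fully relaxed.
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return 1.0 / distance_m


if __name__ == "__main__":
    # Illustrative distances: reading range, arm's length, across a room, across a street.
    for distance in (0.25, 0.5, 1.0, 6.0, 30.0):
        demand = accommodation_demand_diopters(distance)
        print(f"{distance:5.2f} m -> {demand:.2f} D")
```

Under this approximation, reading at 25 cm demands about 4 diopters of focusing power, while a person 30 m away demands almost none, which is why a stiffening lens is felt first at close range.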

Another important aspect of visual perception at distance is visual acuity, the clarity or sharpness of vision. Acuity is commonly measured with a Snellen chart during eye exams. The standard reference point is 20/20 vision, which indicates normal rather than perfect acuity: the ability to read at 20 feet what a typical eye can read at 20 feet, corresponding to resolving detail about one arcminute across. Yet even those with 20/20 vision begin to lose finer details, or fail to recognize individuals altogether, at long distances, both because detail shrinks below the resolvable limit and because of environmental factors such as lighting, atmospheric distortion, and obstructions.
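
To make the link between acuity and distance concrete, here is a minimal sketch that estimates the smallest detail an observer could resolve at a given distance. It assumes the conventional interpretation that 20/20 acuity corresponds to a minimum angle of resolution of one arcminute, and that this angle scales with the Snellen denominator. The function and parameter names are hypothetical, and real-world performance also depends on contrast and lighting.

```python
import math

# One arcminute (1/60 of a degree) expressed in radians.
ARCMIN_IN_RADIANS = math.radians(1.0 / 60.0)


def min_resolvable_detail_m(distance_m: float, snellen_denominator: float = 20.0) -> float:
    """Estimate the smallest resolvable detail, in meters, at distance_m.

    Assumes 20/20 acuity resolves 1 arcminute, so 20/40 resolves 2 arcminutes, and so on.
    """
    if distance_m <= 0 or snellen_denominator <= 0:
        raise ValueError("distance and Snellen denominator must be positive")
    angle_rad = (snellen_denominator / 20.0) * ARCMIN_IN_RADIANS
    # Size of an object that subtends angle_rad at the given distance.
    return 2.0 * distance_m * math.tan(angle_rad / 2.0)


if __name__ == "__main__":
    for distance in (6.0, 15.0, 30.0, 100.0):
        detail_mm = min_resolvable_detail_m(distance) * 1000.0
        print(f"{distance:6.1f} m -> about {detail_mm:.1f} mm")
```

Under these assumptions, the finest resolvable detail grows in direct proportion to distance: roughly 1.7 mm at 6 m and close to 9 mm at 30 m, which is already coarse compared with the small features that distinguish one face from another.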

The importance of environmental factors cannot be overstated when discussing how distance affects recognition. For instance, visibility can vary dramatically with lighting conditions. At dusk or dawn, reduced illumination impairs visual acuity. Likewise, atmospheric conditions such as fog, rain, or haze scatter light, making it harder to discern distant figures. Additionally, objects in motion, whether swaying foliage or the bustle of an urban street, can become visually ambiguous as they recede into the background.

Moreover, visual crowding is a relevant consideration. Crowding is the difficulty of recognizing an object when it is surrounded by nearby clutter, and it is strongest away from the center of gaze. Even under otherwise ideal conditions, a distant person who is surrounded by visually distracting elements, such as other people, signage, or foliage, is harder to pick out and recognize. This difficulty stems from limits in perceptual processing rather than from the optics of the eye. As the distance to a person increases, unique identifiers become less prominent, reducing our ability to recognize them.

To explore this further, consider the distances at which recognition typically breaks down. Empirical studies suggest that individuals with normal vision can identify a familiar person from approximately 50 to 100 feet (roughly 15 to 30 meters) in clear conditions. Beyond that range, recognition becomes increasingly tenuous, and it grows harder to distinguish characteristics such as facial features or even clothing colors.
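
One way to see why recognition fades over this range is to compute how small a face appears at each distance. The sketch below calculates the visual angle subtended by a face, using a face width of 0.15 m as an illustrative assumption rather than a measured figure; the function name is likewise hypothetical.

```python
import math

FEET_TO_METERS = 0.3048
ASSUMED_FACE_WIDTH_M = 0.15  # illustrative assumption, not a measured value


def angular_size_arcmin(object_size_m: float, distance_m: float) -> float:
    """Return the visual angle, in arcminutes, subtended by an object at a distance."""
    if object_size_m <= 0 or distance_m <= 0:
        raise ValueError("size and distance must be positive")
    angle_rad = 2.0 * math.atan(object_size_m / (2.0 * distance_m))
    return math.degrees(angle_rad) * 60.0


if __name__ == "__main__":
    for feet in (10, 50, 100, 200):
        distance_m = feet * FEET_TO_METERS
        angle = angular_size_arcmin(ASSUMED_FACE_WIDTH_M, distance_m)
        print(f"{feet:4d} ft -> face spans about {angle:.0f} arcmin")
```

With the assumed face width, the whole face spans roughly 34 arcminutes at 50 feet and about 17 arcminutes at 100 feet. If an eye resolves detail about one arcminute across, that leaves only a few dozen resolvable elements across the entire face, and fewer still for the eyes, nose, and mouth individually.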

Cognitive biases also affect how people perceive distance. The brain often misjudges spatial dimensions, leading to a skewed sense of how far away others actually are. When people engaged in conversation drift slightly apart, they may not register the resulting distance accurately. This bias can lead people to believe they can still maintain clear visual contact, only to discover that recognition has been compromised.

Furthermore, social cues play a major role in recognition. Humans are inherently social creatures, often relying on non-verbal signals to ascertain familiarity and connection. Eye contact, gestures, and body language play crucial roles in interpersonal communication. At extended distances, these social cues become diluted; recognizing someone therefore depends not only on visual acuity but also on the interplay of social signals. As these cues fade with distance, so does our ability to recognize and stay visually connected with one another.

In summary, the question of how far apart people can be before losing sight of each other is multifaceted and deeply rooted in vision science. Factors such as ocular anatomy, accommodation, visual acuity, environmental influences, and cognitive biases collectively shape our perceptual boundaries. Distance alone can undermine recognition, leading to misunderstandings in social contexts. Understanding these dynamics helps people navigate social interactions more effectively and appreciate the interplay between distance and vision. It also raises a question: are we truly aware of our visual capabilities, or do we take for granted the intricacies of how we perceive those around us?